When I visit customers all around the world in various industries that manufacture very complex machinery, we always end up spending a lot of time on one thing in particular – getting our heads around how to troubleshoot high-level symptoms that the field service engineers face all the time out there.

I have visited companies that build wind turbines, farming machines, diesel engines, air compressors, networking equipment, and even companies in the silicon industry to train them in formalizing and making explicit all of their tacit know-how on how to fix problems in their products in case of unscheduled downtime.

The thing is, because of the complexity of the products, it can be hard to find out where to start. When asked what they do in the field when troubleshooting a certain issue, most techs will say that they start looking at the diagnostics for error codes or abnormalities, then check the log files, then inspect the configuration settings, and so on – all to get an overview of the situation and figure out where to start digging further. And then it gets really difficult.

Diagnostics can potentially send the tech in one or more directions depending on the level and sophistication of the diagnostics system, and sometimes it will even be capable of identifying potential causes. However, in many cases, the error codes produced by diagnostics are not exactly precise, and the techs must rely on their skills and experience – even though diagnostics has sent them on a path.

Without diagnostics, the techs must rely entirely on their know-how …

So, how do we improve that experience?

Take this example from the wind turbine industry. One of my customers wanted to test the Dezide software, so they introduced a certain common error into a turbine in the test facility. Then they had two teams of technicians resolve the problem. The first team contained only junior techs using the Dezide troubleshooting software, and the other team contained only very skilled senior engineers with many years of experience. The senior engineers resolved the problem in 90 minutes using experience. The junior technicians resolved the problem in 12 minutes using the troubleshooting system …

But how is that even possible? How can junior techs with little training outperform senior engineers like that?

How do we capture the knowledge needed to resolve such complex issues in very advanced products, under great uncertainty, with tight deadlines, big performance contracts, and customers breathing down the technician’s neck, eager to get their machines back into operation as soon as possible?

Well, we have already discussed the benefits of capturing troubleshooting knowledge in a sound mathematical model, and you can read about the details in our blog and white papers. The essence is capturing root causes, specifying their probabilities and repair actions, and setting the time and monetary cost for those actions. The difficult part is working out how to break down the troubleshooting process for a complex error in a large system into those causes and actions.
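To make the ingredients concrete, here is a minimal sketch of causes, probabilities, actions, and costs in Python. It is not the Dezide model; it assumes a simple textbook heuristic (try the action with the highest probability per unit of cost first), and every name, number, and the `hourly_rate` parameter is invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Cause:
    name: str
    probability: float  # chance that this cause explains the symptom
    action: str         # repair or check that addresses the cause
    minutes: float      # time the action takes
    money: float = 0.0  # parts or other monetary cost


def action_cost(cause, hourly_rate=100.0):
    """Combine time and money into one figure (hourly_rate is an assumption)."""
    return cause.minutes / 60.0 * hourly_rate + cause.money


def rank_actions(causes, hourly_rate=100.0):
    """Order causes by probability per unit of cost, highest first."""
    return sorted(
        causes,
        key=lambda c: c.probability / action_cost(c, hourly_rate),
        reverse=True,
    )


# Illustrative numbers only, not taken from any real machine.
causes = [
    Cause("Faulty starter motor", 0.30, "Replace starter motor", minutes=120, money=400),
    Cause("Empty fuel tank", 0.50, "Refuel", minutes=10, money=40),
    Cause("Blown fuse", 0.20, "Replace fuse", minutes=5, money=2),
]

for cause in rank_actions(causes):
    print(f"{cause.action}: p={cause.probability}, cost={action_cost(cause):.2f}")
```

Even though the blown fuse is the least likely cause in this made-up data, checking it comes first because it is so cheap to rule out. That trade-off between likelihood and cost is what the model is meant to capture.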

Let’s look at that next.

Divide and Conquer

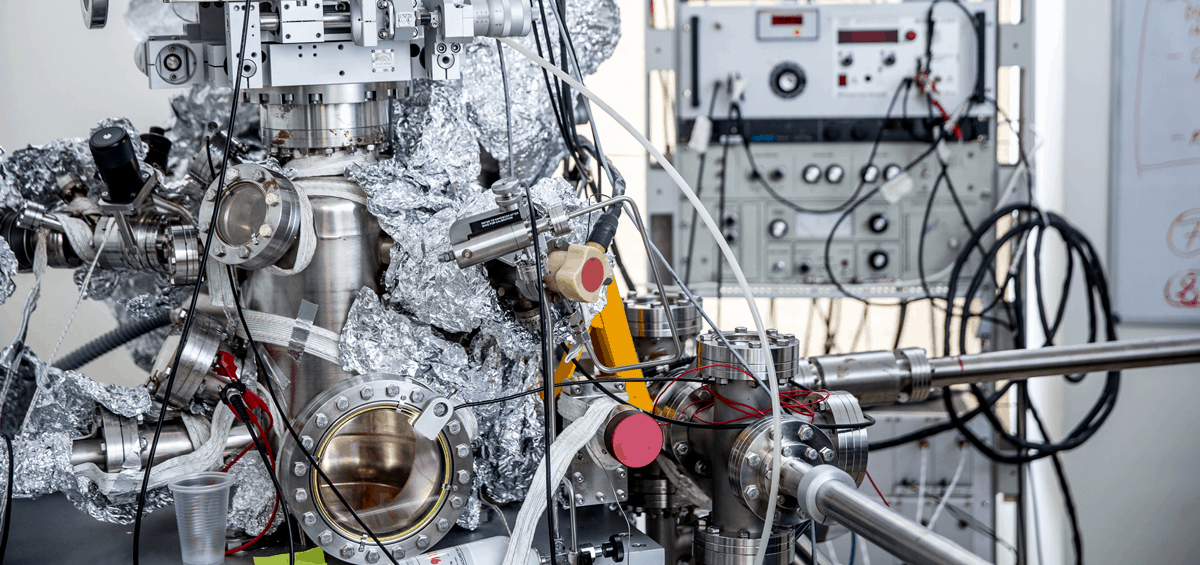

Let’s consider an example from the silicon industry. This company builds tools for manufacturers of CPUs, memory modules, sensors, and photovoltaic products such as solar panels. These tools are immensely complex, more so than any other products I’ve worked with. Large systems consist of a series of chambers, each with its own function and application, and when something breaks down, it can be like looking for a needle in a haystack in an interconnected web of chambers, cables, chemicals, pressures, electricity, and much, much more. If the technicians take something apart to check the innards and it turns out to be the wrong place, the customer is looking at days of downtime.

Trying to capture the entire troubleshooting process for a symptom like helium on the backside of a chamber, where it is not supposed to be, can be daunting even for the experts. Symptoms like that can be related to pressure, electronics, or leaks, or they could originate from any of a long list of sub-systems that make up the system as a whole. Previous attempts at building troubleshooting fault trees for complex issues like these have been unsuccessful, and too much money has been spent on internal knowledge management projects that have failed.

Component Level Troubleshooting

A better approach is to start from the bottom up. We should, therefore, look at one sub-system at a time, build one or more troubleshooting guides for that component, and then move on to other components. When we are done, we will have several troubleshooting guides, each addressing specific issues. The great thing about this approach is that it’s easier to look at only one component at a time and get all the causes for that component captured. It is easy for the technician to consider, in isolation, only the causes for the robotic arm that transfers the product from one chamber to the next, and then move on to the power supply, the manometer, and so on, again each in isolation. It’s a divide-and-conquer strategy that forces us to focus on a given component or module instead of thinking about the overall symptom, which can be overwhelming due to the sheer number of potential root causes spread over many systems.

That’s all good, but the complexity often lies in the interaction between components, so how do I fit it all together, you might ask?

The trick is to piece together hierarchies of troubleshooting guides that, when combined, become even more powerful than on their own.

Here is how.

Troubleshoot Higher-Level Symptoms

With several individual component-level troubleshooting guides in hand, we can move on to looking at the higher-level symptoms. The component-level guides can then be used as “Lego blocks,” and we can build a variety of troubleshooting “super guides” that each handle one or more higher-level symptoms.

The “super guides” will ask a few questions to learn more about the state of the machine and the context of the troubleshooting scenario and then quickly take the engineer to the right place in the machine to start looking.

Sounds easy, right?

For example, an excavator company has built a super guide called “Excavator cannot start.” This is a very general troubleshooting guide for when the technician is in the field at the machine and it doesn’t give him any error codes; all he knows is that it just won’t start.

The super guide starts by asking the technician a couple of questions to home in on the issue and take him down the most probable troubleshooting path:

“Is the display working when the ignition is turned ON?”

If the technician answers, “Yes, the display is working,” this indicates to the system that the problem is most likely not related to the emergency stops, the electrical system, or the fuses, so the system lowers the probability of all the causes associated with those types of problems. All the component-level guides that handle issues around the electrical systems are then taken out of the equation. The system makes the technician focus on other, more likely areas.

The troubleshooter moves on to ask:

“Is the engine cranking?”

If the technician answers, “Yes, the engine is cranking but doesn’t start,” it could indicate issues with the fuel system, so the troubleshooter will include the component-level guides related to the fuel system.
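The effect of those two answers can be sketched as a simple Bayesian update. This is an illustration under assumptions, not a description of how the Dezide engine works internally: the three problem areas, the prior probabilities, and the likelihood values below are all made up.

```python
def update(priors, likelihoods):
    """Bayes' rule: posterior is proportional to prior * P(answer | cause)."""
    unnormalized = {cause: p * likelihoods[cause] for cause, p in priors.items()}
    total = sum(unnormalized.values())
    return {cause: value / total for cause, value in unnormalized.items()}


# Hypothetical starting beliefs for "Excavator cannot start".
priors = {"electrical": 0.4, "fuel": 0.3, "hydraulic": 0.3}

# Assumed P("display is working" | cause): unlikely if the fault is electrical.
display_works = {"electrical": 0.1, "fuel": 0.9, "hydraulic": 0.9}

# Assumed P("engine cranks but does not start" | cause): points to fuel.
cranks_no_start = {"electrical": 0.2, "fuel": 0.8, "hydraulic": 0.2}

after_display = update(priors, display_works)
after_cranking = update(after_display, cranks_no_start)

for cause, probability in sorted(after_cranking.items(), key=lambda kv: -kv[1]):
    print(f"{cause}: {probability:.2f}")
```

With these invented numbers, the electrical causes drop sharply after the first answer and the fuel system becomes the clear front-runner after the second, which mirrors the path the technician is steered down in the example above.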

In a guide hierarchy of arbitrary depth, the guide determines when it is most effective to jump to a relevant sub-guide. The technician doesn’t need to consider this – add a few good questions, and the process becomes even more efficient.

The guides can be started anywhere in the hierarchy. In the example above, the complete guide hierarchy consists of six guides that together cover the entire “Excavator cannot start” problem. However, each of those six guides can also be used individually to address specific issues. So, suppose the technician is on-site and can tell from visual indicators, error codes, or other machine state information that he is facing an electrical issue, a fuel problem, or a hydraulic module failure. In that case, he can start the corresponding guide for that issue directly.
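One way to picture such a hierarchy is as a tree of guides in which every node is a usable guide in its own right. The sketch below is a hypothetical data structure, not the product's actual format; it uses three sub-guides rather than the six in the real example, and the guide and cause names are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class Guide:
    name: str
    causes: list = field(default_factory=list)
    sub_guides: list = field(default_factory=list)

    def all_causes(self):
        """Collect the causes of this guide and of every guide below it."""
        collected = list(self.causes)
        for sub in self.sub_guides:
            collected.extend(sub.all_causes())
        return collected

    def find(self, name):
        """Return the guide with this name anywhere in the hierarchy, or None."""
        if self.name == name:
            return self
        for sub in self.sub_guides:
            match = sub.find(name)
            if match is not None:
                return match
        return None


# Placeholder component-level guides (the "Lego blocks").
electrical = Guide("Electrical system", causes=["Blown fuse", "Emergency stop engaged"])
fuel = Guide("Fuel system", causes=["Empty fuel tank", "Clogged fuel filter"])
hydraulic = Guide("Hydraulic module", causes=["Hydraulic module failure"])

# The super guide reuses the component-level guides as sub-guides.
super_guide = Guide("Excavator cannot start", sub_guides=[electrical, fuel, hydraulic])

# Start at the top: every cause in the hierarchy is in play.
print(super_guide.all_causes())

# Start in the middle: only the fuel-related causes are considered.
print(super_guide.find("Fuel system").all_causes())
```

Because the super guide only references the component guides, the same fuel system guide can be started on its own or reused in another super guide without being rebuilt.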

But that’s not all.

If we add IIoT machine state data to the process, then it becomes a lot smarter! But that is a topic for another post …